Your comments are well taken, but I would differentiate between the ability to build a rich classification scheme and actually deploying one at a scale larger than needed. I like the generality of the WordNet metamodel, but that doesn’t mean I want or need to load all 170,000+ entries into my graphs.

As far as a use case is concerned, I’m thinking of knowledge management in the typical SME professional practice, particularly law. There is, I think, a bright future in this area for tools like Logseq by virtue of the fact that they are text based and can readily be distributed using Git or the like. For an industry that purportedly relies on knowledge, “knowledge management” remains pretty problematic. I have experienced enough failures to appreciate that the biggest problem with current systems is that of “not knowing what you know”, a problem that grows with time and scope. Put another way, discovery is a key issue.

Systems like SharePoint and its ilk allow one to save documents in folder hierarchies and to build controlled and uncontrolled vocabularies with which to classify (i.e. to tag) them, but, at least in my opinion, they fail in two ways. First, although not directly related to this discussion, they don’t support document linking to the degree that Logseq (or on macOS, Hook) does. Second, which is relevant, educating users in how taxonomies are structured and how they should be used is a real difficulty, and getting them to do it consistently even more so. What makes it so difficult is the “discount rate” we apply to our time: how much effort am I prepared to invest now so that you can find something later, perhaps years later? For most people indexing as you enter is an adjunct to their role; it’s just not worth a significant investment of their time, especially when they’re being evaluated on the basis of billable hours. For academics and researchers that’s clearly not the case, since their role is to link, classify, assimilate and derive, but I would suggest that for the majority of knowledge workers the value to them of future discovery by others is rather small.

In my mind the trick to knowledge discovery is to make life as easy as possible for those entering information. Don’t try to force users into sticking to a rigidly defined vocabulary; don’t get in their way; by all means guide them (say with properties), but accept anything that they think appropriate.

Generally the difficult question is something along the lines of “what do we know about X?”. Here X is typically a concept, not a specific thing like the contract we wrote for some client. It’s the sort of question that library indices are designed to facilitate, albeit for physical information that is not directly searchable…

Digital information has the advantage that it can be directly searched using full text indexing. Full text searches fail, however, if the word(s) that I use to envisage X differ from those actually in the document. The same applies to tagging. The word or brief phrase that comes to my mind when I think of a concept is not necessarily the one you used when you tagged the document years ago. It is highly likely, however, that whatever word(s) I think of will be a synonym of the one you actually used.

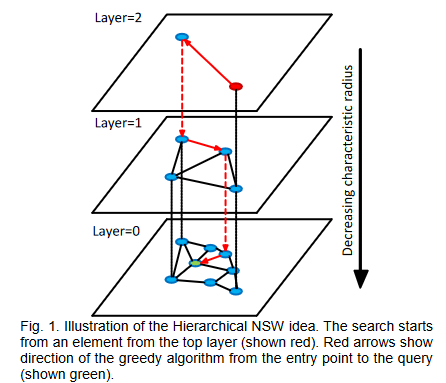

That, however, requires a means of equating terms/phrases to concepts, which is where WordNet synsets come in. As far as hyper- / hyponyms are concerned, I view them as enabling a sense of scale, of zooming in or out. I can start with a high level concept and quickly refine a search by looking at what hyponyms have actually been used, or if I happen to start with a hyponym that hasn’t been used, pull back to a hypernym and see which of its hyponyms have been used. Other linguistic relationships provide different ways of navigating the search space.
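
To make that a bit more concrete, here is a rough Python sketch of the idea. Everything in it is made up for illustration: the `SYNSETS` table is a tiny hand-written stand-in for the WordNet model (not real WordNet data), the `PAGES` and their tags are hypothetical, and the function names are my own. It also assumes each term belongs to exactly one concept, which real WordNet does not guarantee.

```python
from collections import defaultdict

# Hypothetical miniature of a WordNet-style model (NOT real WordNet data).
# Each concept (synset) has interchangeable terms and at most one hypernym.
SYNSETS = {
    "agreement": {"terms": {"agreement", "accord"}, "hypernym": None},
    "contract": {"terms": {"contract", "binding agreement"}, "hypernym": "agreement"},
    "lease": {"terms": {"lease", "tenancy agreement", "rental agreement"},
              "hypernym": "contract"},
    "employment_contract": {"terms": {"employment contract", "employment agreement"},
                            "hypernym": "contract"},
    "licence": {"terms": {"licence", "license"}, "hypernym": "contract"},
}

# Hypothetical pages with whatever free-form tags their authors chose.
PAGES = {
    "Smith matter notes": {"rental agreement"},
    "Acme onboarding": {"employment agreement"},
    "Precedent bank": {"binding agreement"},
}


def concept_of(term):
    """Map a free-form term to its concept id, or None if it is unknown."""
    wanted = term.strip().lower()
    for concept_id, synset in SYNSETS.items():
        if wanted in synset["terms"]:
            return concept_id
    return None


def pages_by_concept():
    """Index pages by the concept behind each tag rather than the tag text."""
    index = defaultdict(set)
    for page, tags in PAGES.items():
        for tag in tags:
            concept_id = concept_of(tag)
            if concept_id is not None:
                index[concept_id].add(page)
    return index


def find_pages(term):
    """Find pages tagged with any synonym of the search term."""
    concept_id = concept_of(term)
    if concept_id is None:
        return set()
    return set(pages_by_concept().get(concept_id, set()))


def zoom_out(term):
    """Return the hypernym concept of the term, or None if there is none."""
    concept_id = concept_of(term)
    return SYNSETS[concept_id]["hypernym"] if concept_id else None


def used_hyponyms(term):
    """Zoom in: direct hyponyms of the term's concept that appear as tags."""
    concept_id = concept_of(term)
    if concept_id is None:
        return []
    index = pages_by_concept()
    return sorted(
        child for child, synset in SYNSETS.items()
        if synset["hypernym"] == concept_id and child in index
    )
```

With this, `find_pages("lease")` finds the page that was tagged “rental agreement”, even though the two strings share no words. Searching for “licence” finds nothing, but `zoom_out("licence")` pulls back to the contract concept, and `used_hyponyms("contract")` then shows which narrower concepts have actually been used.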

Hope this explains better!